Retro-ML is an open-source project that implements NEAT (NeuroEvolution of Augmenting Topologies) machine learning for a number of retro video games, such as Super Mario World, Super Mario Kart, Metroid, Super Mario 64, and more.

What does Retro-ML do?

Retro-ML lets you train Artificial Intelligences to beat various games. It was made with configurability in mind, allowing the user to configure custom objectives or tweak inputs/outputs to see how the AIs will act.

Development process

Retro-ML's development was split across two semesters. The first was mostly about creating the app itself, as well as the training and play processes for Super Mario World. The second was all about implementing as many games as possible within the app.

Semester 1

We decided on NEAT machine learning because there were not many examples of it being used in video games out there. While we were aware that SethBling's MarI/O project also applied NEAT to Super Mario World, we felt like we could make an app that feels more complete, and that would train AIs way faster using multithreading and multiple emulator instances. We also focused greatly on the configurability of the training, allowing the user to set up the training sessions however they want.

This semester was all about Super Mario World, and back then, the project was called SMW-ML (or Super Mario World - Machine Learning). We quickly implemented the emulator communication script, and managed to have a working training session (with multiple emulator instances!) within a week. At that point, all the AI was fed were the static tiles around it, as well as some state variables (is Mario touching the ground? is Mario in water? etc.). Once that initial version was done, we worked pretty hard for the rest of the semester to make the app better and better, and to fetch more and more information from the game to feed to the AIs.
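To give a rough idea of what "static tiles plus state variables" means as a neural network input, here's a minimal Python sketch. It is not the app's actual code: the function name, the grid layout, and the choice to treat out-of-bounds tiles as empty are all assumptions made for illustration.

```python
from typing import List, Sequence


def build_inputs(
    tiles: Sequence[Sequence[int]],
    mario_x: int,
    mario_y: int,
    radius: int,
    state_flags: Sequence[bool],
) -> List[float]:
    """Flatten the tile window around Mario, then append the state flags.

    `tiles` is a hypothetical level grid where a non-zero value is a solid tile.
    """
    inputs: List[float] = []
    for y in range(mario_y - radius, mario_y + radius + 1):
        for x in range(mario_x - radius, mario_x + radius + 1):
            in_bounds = 0 <= y < len(tiles) and 0 <= x < len(tiles[y])
            # Assumption: anything outside the level counts as empty space.
            inputs.append(1.0 if in_bounds and tiles[y][x] else 0.0)
    # State variables such as "on ground" or "in water" become 0/1 inputs.
    inputs.extend(1.0 if flag else 0.0 for flag in state_flags)
    return inputs
```

With a radius of 1 and two state flags, this gives the network 9 tile inputs followed by 2 state inputs.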

By the end of the semester, we could train AIs to beat a lot of different levels, although generalization was really hard to achieve, and mostly only worked on really simple left-to-right levels without too much waiting.

Semester 2

The second semester was all about making the application support additional games. Minor improvements were made to the main application, but outside of those, almost all of our dev time went into the implementation of new games. With an additional teammate, the goal was to try and implement 10 games in total, whilst also making it as easy as possible for outside developers to implement games themselves.

Quickly, the application was modified to use external plugins for all console and game support, as I thought it would be the easiest way to decouple everything. And so, it is possible to support games without having them be in the actual solution, as long as they implement the proper interfaces.
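The general shape of that plugin approach can be sketched as follows. This is a Python illustration of the idea only, not the application's real interfaces: `GamePlugin`, `PluginRegistry`, `DemoGame`, and every method name here are hypothetical.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class GamePlugin(ABC):
    """Hypothetical contract that a game plugin would have to implement."""

    @property
    @abstractmethod
    def name(self) -> str:
        """Unique name used to look the plugin up."""

    @abstractmethod
    def read_inputs(self, memory: bytes) -> List[float]:
        """Turn raw emulator memory into neural network inputs."""

    @abstractmethod
    def score(self, memory: bytes) -> float:
        """Rate how well the AI is doing from the current memory state."""


class PluginRegistry:
    """The host application only ever talks to plugins through the interface."""

    def __init__(self) -> None:
        self._plugins: Dict[str, GamePlugin] = {}

    def register(self, plugin: GamePlugin) -> None:
        if plugin.name in self._plugins:
            raise ValueError(f"plugin {plugin.name!r} is already registered")
        self._plugins[plugin.name] = plugin

    def get(self, name: str) -> GamePlugin:
        return self._plugins[name]


class DemoGame(GamePlugin):
    """Toy plugin living outside the host code, for illustration only."""

    @property
    def name(self) -> str:
        return "demo"

    def read_inputs(self, memory: bytes) -> List[float]:
        return [byte / 255 for byte in memory]

    def score(self, memory: bytes) -> float:
        return float(sum(memory))
```

The point of the design is that `PluginRegistry` never needs to know `DemoGame` exists at build time; anything that fulfils the interface can be registered.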

After that, work began on implementing new games. I ended up working on Super Mario Kart, Metroid, and Super Mario 64, whilst my teammates worked on the other games. With our attention spread between a lot of different games, we couldn't put as much time into each one, meaning they wouldn't be as feature-complete as Super Mario World. In the end, we implemented a total of 9 games, one short of our goal.

As our project was a university project, we were graded for it, and I'm proud to say we got a perfect grade (A+) for both semesters, even if we didn't manage to do everything we wanted in the end.

Training sessions

This section will include videos for all the different AIs we've been able to train and my comments on them.

Super Mario World

The video at the start of the article already shows off the progression of AIs in Super Mario World, but here are some additional videos of interesting AIs we've been able to train.

This AI does a pretty interesting shell kick to clear up the enemies in front of it, then goes on to beat Yoshi's Island 2.

This AI uses the age-old technique of going above the entire Star World 2 water stage instead of trying to beat it regularly.

While this AI does not beat the level (back then, the AI couldn't see dangerous tiles, such as spikes), it does some pretty crazy maneuvers to avoid getting hit while underwater.

An AI beating the Yoshi's Island 1 level pretty quickly.

Super Mario Kart

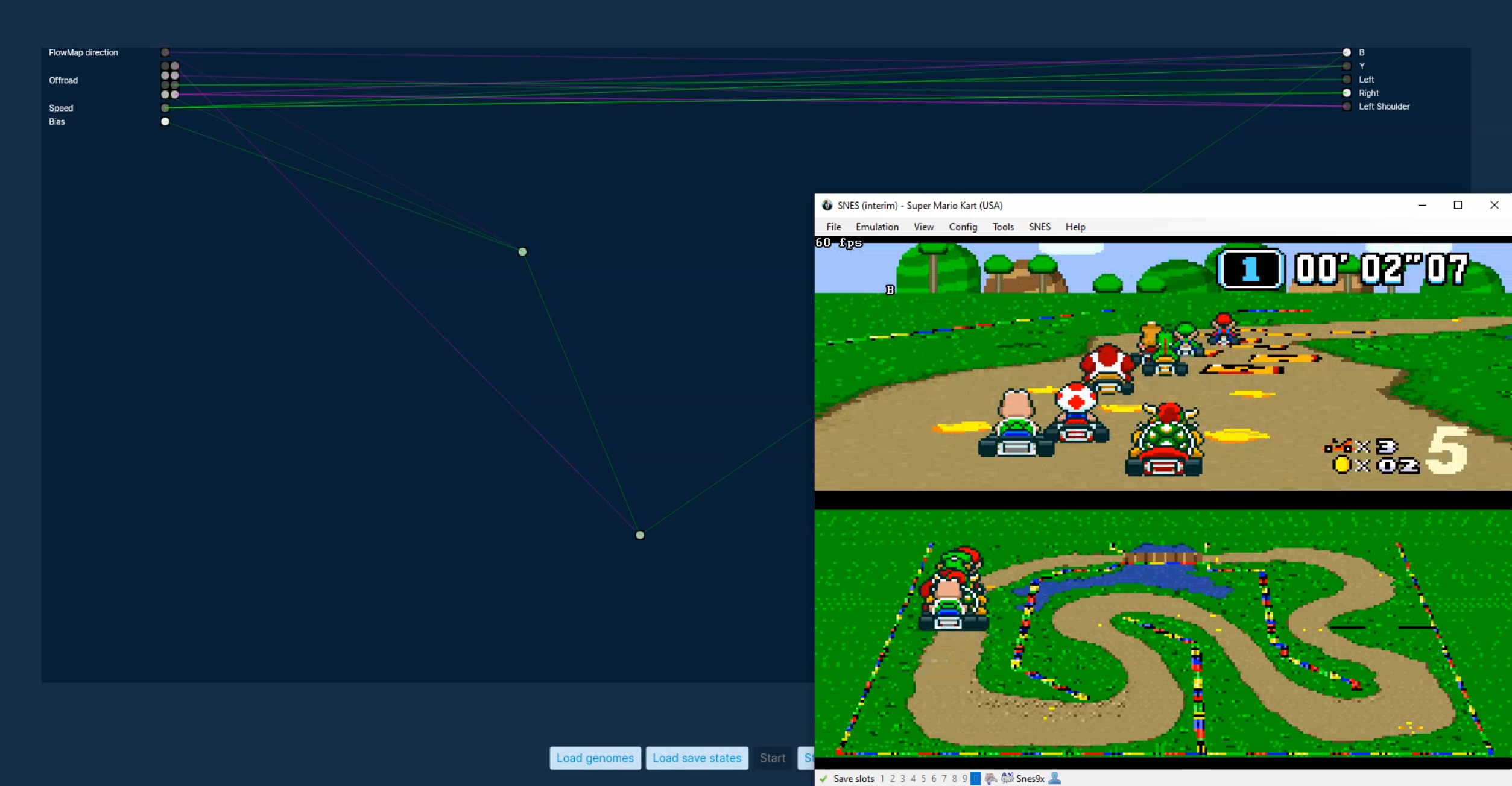

Super Mario Kart was the second game to be implemented in the application, and makes for some pretty interesting behaviours as you train AIs to beat races.

Machine Learning evolution

Here's a video showing off how AIs learn a track in Super Mario Kart.

This AI just loves respecting the speed limit, and thanks to that, it takes as much time as it can to beat the course.

This AI was trained on Rainbow Road, and learned that going offroad meant falling into a pit and losing lots of time. So when it races on different tracks, it tries its hardest to never ever go offroad.

This pretty impressive AI beat Rainbow Road without ever falling off, although it does hit a thwomp twice during the race.

Super Mario Bros

Here's an AI that's mastered 1-4, using raycasting instead of regular tiles as input.

Alternatively, here's a pretty similar AI that simply uses the tiles around itself as input. Note that for this AI, the neural network had too many connections to render within the app.

Metroid

Metroid was a tough game to implement, since the game is non-linear. The AI is guided through a path it needs to follow to get to the upgrades it needs. Here's an AI that does a decent job at following said path.

Here are some additional clips of interesting/funny Metroid AIs:

This AI just does not know how to get past that single enemy, and ends up almost dying to it.

This AI takes a long time to line up a jump to get to the powerup, but eventually just fails the jump.

Tetris (GBC)

Tetris was a tough challenge and never really worked properly, since the AI had to figure out both the rules of the game and how to move the pieces. Had we started implementing the game with the knowledge we have now, we would have made it so that the AI does not need to figure out the controls, and instead just tells us where it wants the pieces.
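As a sketch of that alternative, the AI would output a target column and rotation, and the app would translate them into button presses. The Python below is only an illustration of the idea, not something we implemented: the function and the `ROTATE`, `LEFT`, `RIGHT`, and `DROP` names are hypothetical, and it assumes rotating a piece doesn't shift its column.

```python
from typing import List


def placement_to_buttons(
    current_col: int,
    current_rot: int,
    target_col: int,
    target_rot: int,
    rotation_states: int = 4,
) -> List[str]:
    """Translate "put the piece here" into a sequence of button presses."""
    presses: List[str] = []
    # Rotate in a single direction until the target orientation is reached.
    presses += ["ROTATE"] * ((target_rot - current_rot) % rotation_states)
    # Then shift horizontally towards the target column.
    shift = target_col - current_col
    presses += ["RIGHT" if shift > 0 else "LEFT"] * abs(shift)
    # Finally, let the piece fall.
    presses.append("DROP")
    return presses
```

This way, the network only has to learn where pieces should go, while the controls are handled by plain code.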

Street Fighter 2 Turbo

The AI is quite good at Street Fighter, and we managed to make it play against real players during our university presentation. Against actual humans, the AI is decent, though it gets beaten pretty easily by people who have experience playing traditional fighting games.

Pokemon Yellow

Training an AI to play Pokemon is a much bigger challenge than we initially thought. There are a lot of special moves with special effects you need to keep track of. The biggest challenge was translating everything that was happening in game to inputs the AI could parse. The AI's output is quite simple, being a total score it assigns to each move.
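One straightforward way to use such per-move scores is to pick the highest-scoring move. The Python sketch below shows that idea; picking the maximum and the `usable` mask (for moves that can't be selected, for example) are assumptions for illustration, not a description of how the app makes the choice.

```python
from typing import Sequence


def choose_move(scores: Sequence[float], usable: Sequence[bool]) -> int:
    """Return the index of the highest-scoring move that can be used."""
    candidates = [i for i, ok in enumerate(usable) if ok]
    if not candidates:
        raise ValueError("no usable move")
    # On ties, max() keeps the first candidate it encountered.
    return max(candidates, key=lambda i: scores[i])
```

For example, `choose_move([0.1, 0.9, 0.5, 0.2], [True, False, True, True])` skips the unusable second move and returns index 2.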

Super Bomberman 2

Bomberman is sadly a failure at the moment. The AIs trained by our program simply do not want to move or play the game: placing a bomb means the AI also needs to move out of the way to survive, so it just ends up doing nothing at all times. One possible fix would be to give points to the AI when it places a bomb, but remove those points if it dies to it. Had we had more time, we could have fixed up Bomberman a bit more.
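That untested idea could look something like the Python sketch below. The function name and the bonus value are hypothetical, and it takes one particular reading of the idea: only the bomb that killed the AI loses its points.

```python
def bomb_score(
    bombs_placed: int,
    died_to_own_bomb: bool,
    bonus_per_bomb: float = 10.0,
) -> float:
    """Reward placing bombs, but take back the bonus of a bomb that kills the AI."""
    rewarded_bombs = bombs_placed - (1 if died_to_own_bomb else 0)
    # Never let this term go negative, so doing nothing is not the best option.
    return max(rewarded_bombs, 0) * bonus_per_bomb
```

With this term, an AI that places three bombs and survives scores 30, one that dies to its third bomb scores 20, and one that never places a bomb scores 0.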

Super Mario 64

Super Mario 64. The 3D game of the bunch. This brought a bunch of challenges while figuring out how the AI should see, how its inputs should work, and more. Had I had more time to work on this game, I would have figured out a way to make inputs independent of the camera, just so the AI has less trouble navigating. On top of that, the rays being cast around the AI for its vision aren't great, since there is the same number of rays per row, no matter the vertical angle, meaning multiple rays are wasted on the straight-up and straight-down directions.
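One possible way to avoid those wasted rays would be to scale the number of rays in each row by the cosine of its vertical angle, so rows near the poles get fewer rays. The Python sketch below is just that idea, not what the app does; the function names, the axis convention, and the full 360-degree spread per row are assumptions.

```python
import math
from typing import List, Sequence, Tuple


def rays_per_row(vertical_angles_deg: Sequence[float], max_rays: int) -> List[int]:
    """Give each row a ray count proportional to cos(vertical angle), minimum 1."""
    return [
        max(1, round(max_rays * math.cos(math.radians(angle))))
        for angle in vertical_angles_deg
    ]


def ray_directions(
    vertical_angles_deg: Sequence[float], max_rays: int
) -> List[Tuple[float, float, float]]:
    """Build unit direction vectors (x, y, z), with y pointing up."""
    directions: List[Tuple[float, float, float]] = []
    counts = rays_per_row(vertical_angles_deg, max_rays)
    for pitch_deg, count in zip(vertical_angles_deg, counts):
        pitch = math.radians(pitch_deg)
        for i in range(count):
            # Spread the row's rays evenly around the vertical axis.
            yaw = 2 * math.pi * i / count
            directions.append(
                (
                    math.cos(pitch) * math.cos(yaw),
                    math.sin(pitch),
                    math.cos(pitch) * math.sin(yaw),
                )
            )
    return directions
```

With rows at -90, -45, 0, 45, and 90 degrees and a maximum of 8 rays, this casts 1, 6, 8, 6, and 1 rays per row (22 in total) instead of 40.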

Future goals

I want to continue maintaining our application throughout the years, and hopefully have people interested in contributing to the repo, or even using it for their own machine learning implementations. I can't afford to put as much time into the application as I did during university, however, so new features or even game implementations will be slow in coming. One of my first objectives will be to fix up some of the games (such as Tetris and Super Bomberman) for better training. I'd also love to eventually work on Mario Kart 64 machine learning, but this would require some good documentation of how the game works internally.

In any case, I loved working on this project, and I hope it'll contribute even more to machine learning in video games in the future.

Special Thanks

- MrL314, for the great Super Mario Kart RAM documentation.

- DataCrystal and its contributors, for all the RAM maps which allowed us to save time implementing each game.

- Pannenkoek2012 and the STROOP contributors, for the great Super Mario 64 documentation.
